How Performance Reviews are being Reinvented, Refined and Perfected

Note: Republishing this post I shared on the Medium paid subscription a few years ago. This was originally written in July 2015.

Performance Reviews

At the first mention of performance reviews, everyone seems to tighten up. They’re not something anyone looks forward to. Yet the idea behind performance reviews is simple: provide feedback and coaching, and share what the results of past performance mean for an employee’s future compensation and career.

Apprehension about performance reviews often leads to half-assery on the part of managers and dismissal from the employee’s side, neither of which is helpful or productive. It turns out that once you are aware of the potential pitfalls, reviews can be easy, productive, and beneficial.

So here’s everything you need to know to make your performance reviews simple, easy, and productive. And maybe even not terrifying. Here’s what we’ve got in this Edition of Evergreen:

The Classic Performance Review: As explained by a master of management, Andy Grove.

Performance Review Non-believers: The various pitfalls of the review system, with lessons from big companies and psychology papers.

Case Studies of Performance Reviews Reinvented: See who has innovated and built new productive systems that you can adopt.

Enjoy the read, and have fun getting smarter.

Why You Need Performance Reviews

The best place to start for most management topics is, of course, with Andy Grove of Intel and his classic management book, High Output Management.

He devotes “Chapter 13: Performance Appraisal” to guidance on how to do performance reviews, and why they are so important to a manager’s toolkit. This is the best resource on traditional performance reviews, full of fantastic insight and advice from a man who has a lifetime of lessons to teach on management.

Grove strongly believes in the importance of Performance Reviews:

The fact is that giving such reviews is the single most important form of task-relevant feedback we as supervisors can provide.

The long and short of it: if performance matters in your operation, performance reviews are absolutely necessary.

As Grove puts it, the most crucial function of performance reviews is to improve employee performance. Every other function is secondary to that.

In order to execute excellent performance reviews, we have to start with the assessment process itself:

Determining the performance of professional employees in a strictly objective manner is very difficult because there is obviously no cut-and-dried way to measure and characterize an employee’s work completely. Many jobs involve activities that are not reflected by output in the time period covered by the review.

On having distinct goals to measure against:

The biggest problem with [assessments] is that we don’t usually define what it is we want from our subordinates, and, as noted earlier, if we don’t know what we want, we are surely not going to get it.

On evaluating an employee’s output, not hypothetical future output:

One big pitfall to avoid is the “potential trap.” At all times you should force yourself to assess the performance, not the potential.

On holding managers accountable for the product of their team:

The performance rating of a manager cannot be higher than the one we would accord to his organization!

After assessing the performance, and creating the worksheet (read more about this in Grove’s book) that organizes and prioritizes the potential topics of conversation, you’re ready for the meeting where you deliver the assessment. Here’s what Grove has to say about that:

There are three L’s to keep in mind when delivering a review: Level, listen, and leave yourself out.

Level: You must level with your subordinate — the credibility and integrity of the entire system depend on your being totally frank. […]

Listen: This is what I mean by listening: employing your entire arsenal of sensory capabilities to make certain your points are being properly interpreted by your subordinate’s brain. […]

Leave yourself out: It is very important for you to understand that the performance review is about and for your subordinate. So your own insecurities, anxieties, guilt, or whatever should be kept out of it.

This chapter is stuffed full of helpful advice that we’ve just seen the tip of, so if you don’t already own High Output Management, it’s worth buying for this chapter alone.

Performance Review Non-believers

There are a lot of benefits to Performance Reviews as put forth by Andy Grove, and no doubt Intel was better for them under his instruction. However, there are many people who disagree, and believe that performance reviews are flawed, broken, and otherwise useless.

It may be that they’re only effective if executed thoroughly as they were under Grove, and the benefits quickly become harmful if they’re half-assed. Or maybe they are best in a large and heavily measured business like Intel.

Let’s look at some of the dissenting opinions and see what we can learn about the problems that they see with the traditional process:

Conflation of Performance and Compensation

Performance reviews are the medium for quite a few tasks that could be treated separately. Here is a list of issues that are commonly handled through performance reviews:

Assessing an employee’s work

Improving performance

Motivating an employee

Providing feedback

Justifying a raise or promotion

Rewarding performance

Providing discipline

Advising on a work direction

Reinforcing company culture

That’s a lot of stuff. Performance reviews are packed with content, and some messages tend to tower over others in the mind of the recipient. If the conversation includes news about a raise or bonus, the employee is not likely to remember anything else that you said.

As David Maister puts it in his book, True Professionalism:

It is a truism of management that the worst possible way to give someone performance feedback (and have it accepted as constructive critique) is to save it until the end of the year and give it all at once, just at the very moment when his or her acknowledging the critique will in one way or another affect compensation.

Google has come up with an interesting solution to this problem, as Laszlo Bock explains in ‘Work Rules!’, his book about the People Operations team at Google. Thanks to Itamar Goldminz for the contribution!

Google has learned that it’s beneficial to separate these two very different concepts into totally distinct conversations, held a month apart. In November, employees have their performance reviews, with the standard conversation about areas of improvement and emphasis on good work. In December, a separate conversation covers compensation and role changes.

Our Psychological Limitations in Taking Criticism

Judging by the literature coming from the field of Psychology, some academics have launched an all-out assault on performance reviews. Some articles read like they are determined to see them banned from the earth.

This short post from Psychology Today cites a few studies that show some of the negative consequences, such as decreased productivity and loyalty to the company. Adobe even found that voluntary attrition was significantly higher in the period after performance reviews. [Thanks to Natala Constantine and Jason Evanish, respectively, for these two contributions.]

The numerical ranking system is particularly vilified from the psychologists’ perspective, as addressed in this post on Strategy+Business, suggested by Cecile Rayssiguier. You can see how ranking performance negatively affects people in this video:

These studies serve as a reminder of the psychological and emotional power of these conversations. Handled poorly, they can affect people for long after the meeting is over, and plant seeds of resentment and attrition.

We can see an explicit example of this from Microsoft. This article in Vanity Fair is based on interviews with numerous former executives there, who had very clear opinions on the company’s now-abandoned ranking system:

At the center of the cultural problems was a management system called “stack ranking.” Every current and former Microsoft employee I interviewed — every one — cited stack ranking as the most destructive process inside of Microsoft, something that drove out untold numbers of employees. The system — also referred to as “the performance model,” “the bell curve,” or just “the employee review” — has, with certain variations over the years, worked like this: every unit was forced to declare a certain percentage of employees as top performers, then good performers, then average, then below average, then poor.

The expected negative effects unfolded and amplified over the years:

By 2002 the by-product of bureaucracy — brutal corporate politics — had reared its head at Microsoft. And, current and former executives said, each year the intensity and destructiveness of the game playing grew worse as employees struggled to beat out their co-workers for promotions, bonuses, or just survival.

Microsoft’s managers, intentionally or not, pumped up the volume on the viciousness. What emerged — when combined with the bitterness about financial disparities among employees, the slow pace of development, and the power of the Windows and Office divisions to kill innovation — was a toxic stew of internal antagonism and warfare.

That is not a description of a company that any of us would like to work at, let alone one whose employees we would want to be responsible for.

Rushing, Oversights, and Laziness

It’s often very tough for managers to bring themselves to deliver negative feedback. Especially 10+ times in a row. Popforms mentions this in a post:

Everybody does fine: It’s much easier to look everything over and say, “Overall, this is fine.” And it works because most employees are doing fine, and everyone would rather hear that they’re doing fine than hear that they are doing poorly.

Delivering a weak, watered-down version of the assessment does not help the employee, you, or the organization. Employees need to hear the good news and the bad news to really learn and improve.

This is a very common affliction in the performance review process. Managers rate everyone as average, because the alternative means more work for them. If employees are rated above or below average, a justification will be required. If they’re great, you now have to start a promotion and growth discussion and may need to go to bat to get them a raise or bonus. If they’re poor, then you have to start a Performance Improvement Plan, which often requires detailed, documented discussions.

The problem is worse for managers with large teams, as the time requirements add up quickly. Knowing that, lazy managers simply default to average ratings across the board.

Thanks to Caitrin McKenzie for suggesting the Popforms post.

Case Studies: New Performance Reviews

Hearing these conflicting reports on the purpose, practice, and protocol of performance reviews — what do we do? How can we proceed with an intelligently designed system of assessment, feedback, and improvement that is fair and productive?

We need to review the options to improve our systems and match them to our team and our set of challenges.

That’s what some companies have done already. We can follow their journey to learn what our improvements look like. Here are some interesting experiments and findings from companies that have done some exploration.

Deloitte: ‘Reinventing Performance Management’

Deloitte has done some very interesting things with its management practices, and radically improved its performance review system. Its efforts are described in a recent article in Harvard Business Review, suggested by Jason Evanish.

The first problems Deloitte discovered in its diagnosis process were with the assessment practice itself. As it turns out, the rater is the main factor in the quality of an employee’s assessment:

How significantly? The most comprehensive research on what ratings actually measure was conducted by Michael Mount, Steven Scullen, and Maynard Goff and published in the Journal of Applied Psychology in 2000. Their study — in which 4,492 managers were rated on certain performance dimensions by two bosses, two peers, and two subordinates — revealed that 62% of the variance in the ratings could be accounted for by individual raters’ peculiarities of perception. Actual performance accounted for only 21% of the variance.

This led the researchers to conclude: “Although it is implicitly assumed that the ratings measure the performance of the ratee, most of what is being measured by the ratings is the unique rating tendencies of the rater. Thus ratings reveal more about the rater than they do about the ratee.”

Deloitte’s solution to this was ingenious. As it turns out, the raters aren’t wrong at the core; it’s the act of rating that creates the problem. When Deloitte restructured the survey to ask about a manager’s intended future actions with that employee, they found that they got clear, reliable answers.

Google’s response to this problem was totally different (as explained in Work Rules!). Their solution was to have managers peer-review each other’s assessments, talking through assumptions and explanations to bubble up and address any biases. They also take the proactive step of educating managers about these biases before the assessment process.

And here is the overview of the solution that Deloitte deploys:

This is where we are today: We’ve defined three objectives at the root of performance management — to recognize, see, and fuel performance. We have three interlocking rituals to support them — the annual compensation decision, the quarterly or per-project performance snapshot, and the weekly check-in. And we’ve shifted from a batched focus on the past to a continual focus on the future, through regular evaluations and frequent check-ins.

The quarterly performance snapshot is fascinating and has some thoughtful details about phrasing questions, and the correct level of transparency with results. It’s certainly worth reading the full post.

Let’s dig into understanding the structure of the weekly check-ins:

Our design calls for every team leader to check in with each team member once a week. For us, these check-ins are not in addition to the work of a team leader; they are the work of a team leader. If a leader checks in less than once a week, the team member’s priorities may become vague and aspirational, and the leader can’t be as helpful — and the conversation will shift from coaching for near-term work to giving feedback about past performance.

In other words, the content of these conversations will be a direct outcome of their frequency: If you want people to talk about how to do their best work in the near future, they need to talk often.

This is such a great insight — that the content of a conversation is determined by the frequency. It’s the meeting version of ‘the medium is the message.’ This dynamic really shows the inability of yearly or even quarterly meetings to carry the weight of coaching near-term performance.

Of course, what Deloitte has really arrived at is the concept of the regular 1-on-1 meetings that Andy Grove and many others have been relying on for decades. The interesting thing here is that the way they implemented them shows the versatility and the importance of those 1-on-1 meetings.

Deloitte has some fantastic innovations here that will likely spread to other companies, to be further refined and reapplied.

Atlassian’s Performance Review Renovation

Feeling the pain of all of the previously mentioned challenges associated with performance reviews, Atlassian did a full renovation of its review process, with very interesting results.

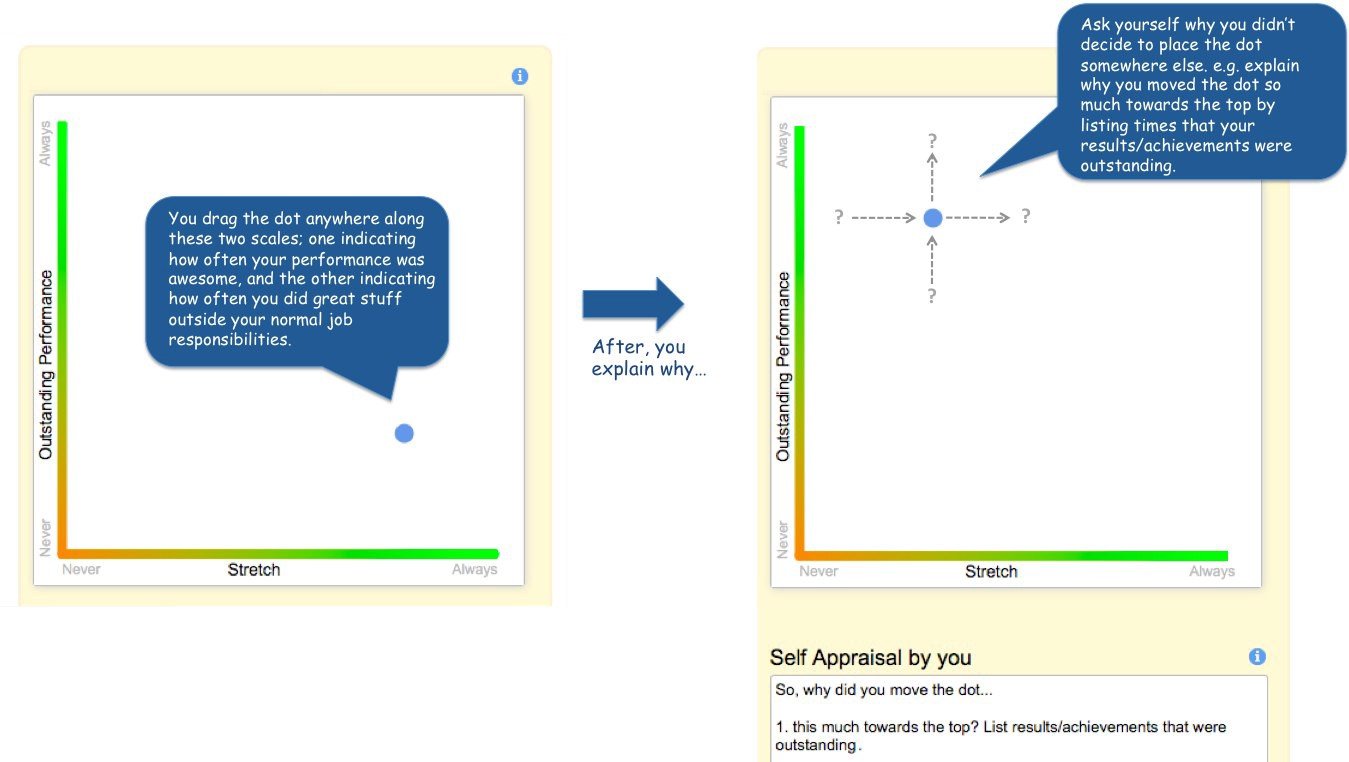

One big change was to assess performance in greater depth. Rather than a mere good/bad, they added an axis for level of challenge, and another question that respected the frequency of various behaviors.

As they changed their performance review system, it became clear to them that their compensation process had been flawed as well, and they stopped issuing bonuses that were dependent on reviews:

Instead, we gave everyone a salary bump. Similar to Netflix’s approach, we prefer to pay top market salaries rather than bonuses. However, we continued to pay an organizational bonus like before, so people will share in the company’s success.

Also, similar to Deloitte’s version, they discovered that their performance reviews were faltering due to functional overload. To correct this, they adopted a novel solution:

The thing with our traditional review was that, despite good intentions, it focused mainly on two sections: the manager rating and the employee’s weaknesses. This makes total sense as the first thing a person will be curious about is their rating (which also affects their bonus). Even if a person receives a “good” rating, most of the time will be consumed by justifying why the person didn’t get an “outstanding” rating. We wanted to introduce a lightweight and continuous model of conversations designed to remind people to — every now and then — talk about topics other than daily operational stuff.

We changed the following:

1) All sections should receive equal attention. We think that the 360 review feedback can better be discussed in a separate conversation. Same goes for performance ratings, strengths, weaknesses and career development, etc.

2) We split the sections into separate conversations with their own coaching topics. Every month we allocate one of our weekly 1:1s to a coaching topic.

This honest post by the Atlassian team about their challenges in reinventing their performance review process is well worth a read. It will give you some ideas, and prepare you for some of the challenges of pursuing your own version of this exercise.

Conclusions & Summaries

With these companies as examples, we see a wider set of tools being used more precisely for specific functions of management.

Google kept the yearly review structure but acknowledged the broken parts of the system. Understanding that managers can be biased and subjective, Google has them read a handout immediately before assessing performance to attempt to rid them of bias. After the assessments are written, they are peer-reviewed by other managers as another check for fairness. Given the amount of possible human error in this process and the consequences of mistakes in these reviews, Google is wise to put those systems in place.

Atlassian has transformed its review process into a monthly cycle of more well-defined and carefully structured conversations between managers and their employees.

And Deloitte has dissolved its formal review process into simple quarterly performance snapshots and unstructured weekly 1-on-1 check-ins.

Which of these new approaches to performance reviews is right for your team is up to you and the leaders at your company. This can be a starting point for your own journey of experimentation and improvement.

There is a lot to improve about these systems, and these companies are just starting to uncover the possibilities of what we can do to build out more robust, more fair, and more effective systems of feedback and improvement.

Let’s get to work.